The following article was first published as a chapter in “Perspectives on Impact: Leading Voices On Making Systemic Change in the Twenty-First Century” in 2019.

Designing prize competitions for outcome

Following the 2007-2008 American recession, innovation was the name of the game. New job titles were minted, centers of excellence were formed, and corporate garages quickly dotted the landscape. At the time, Luminary Labs was working with Sanofi U.S., a pharmaceutical company, to identify viable ‘beyond-the-pill’ solutions that took advantage of emerging technology, including rapidly growing smartphone ownership, to support people living with diabetes. The 2010 introduction of health care reform in the United States, which encouraged the industry to pursue the ‘triple aim’ of improving the patient experience and health outcomes while reducing cost, amplified the urgency of this request.

In response, we developed the inaugural Sanofi U.S. Data Design Diabetes Open Innovation Challenge, which called on designers, developers, data scientists, and the world at large to submit solutions to improve the outcomes or experience of people living with diabetes. The most innovative and human-centered concepts would be awarded a prize purse of $220,000 with no strings attached: finalists and winners could take the prize money without any further obligation to the sponsor, which sought to stimulate the marketplace and identify potential partners. In what had historically been a closed industry, this was a new model, and it was good for the patient, the innovator, and the company.

Prize competitions like Data Design Diabetes belong to a field known as open innovation. The term was coined by Henry Chesbrough of the University of California, Berkeley, who describes open innovation as “a distributed innovation process based on purposively managed knowledge flows across organizational boundaries, using pecuniary and nonpecuniary mechanisms in line with the organization’s business model.”

Traditionally, organizations have created closed environments in which to execute against particular aims. They compete for talent, invest in research and development, create intellectual property, and build a fortress around the entire thing. But what if organizations accepted that the best ideas might not come from within their four walls? Or that the most novel solutions might live at the fringes of an industry’s or field’s ecosystem? Or that partnership is the path to viability? This is precisely the kind of thinking behind open innovation: when organizations open up, they can both accelerate the identification of novel ideas and create tangible value for themselves and the world at large.

Open innovation is not just for commercial businesses that aim to do good and do well. Both government and nonprofit organizations have long embraced open innovation to address some of the world’s most pressing problems. In the eighteenth century, Britain offered a significant prize purse for advancements in seafaring navigation, and Napoleon’s investment in a competition led to innovation in food preservation. More recently, the Defense Advanced Research Projects Agency’s 2004 Grand Challenge ignited a decade of progress in autonomous vehicle technology. In 2016, the Robert Wood Johnson Foundation funded The Mood Challenge for ResearchKit™, a competition designed and produced by Luminary Labs, to further the understanding of mood. And in 2017, the MacArthur Foundation awarded a $100 million grant through a competition to fund a single proposal that promised real and measurable progress in solving a critical problem of our time. The prize went to Sesame Workshop, one of the other contributors to this book, which, in partnership with the International Rescue Committee, created programming for refugee children.

To be sure, open innovation is not the only tool in the social impact toolbox. And when an organization needs to be prescriptive or has a preconceived idea of what its ideal solution looks like, a more traditional procurement method may be preferred. But open innovation is particularly helpful when one is receptive to a wide array of solutions and willing to accept that some will completely miss the mark.

At Luminary Labs, we’re focused on the problems that matter — from the future of health and science to the future of work and smart cities. Over the past eight years, we have found that open innovation initiatives, and incentive prize competitions in particular, reap the same benefits for impact as they do for commercial aims: by tapping the power of the crowd, organizations can identify solutions that are both novel and viable. Defining the problem, investing in design, and optimizing for outcome are key to making open innovation work.

Hackathon or challenge?

Organizations practice open innovation in many ways, and, thanks to big tech, hackathons (sprint-like events that last a day or a weekend) have become standard fare across a number of industries. The first documented hackathon took place in 1999, and Facebook has promoted ‘epic, all night’ hackathons as a key element of its culture. But while hackathons are valued for their ability to stimulate early thinking, identify talent, and produce rough prototypes, open innovation challenges, also known as incentive prize competitions (or simply prizes), unfold over months or even years, often with the aim of exceeding a threshold or proving real-world viability. Multistage challenges, also known as down-select challenges, do this by narrowing the pool of entrants at each round of judging, culminating in one or more winners.

Designing for outcome

So what makes for a good impact prize competition? We believe that there are three contributing factors: first, clearly defining the problem to be solved; second, investing in challenge design; and third, providing solvers with the resources required to close the gap between concept and viability.

Defining the problem

In an era in which people are seeking the ‘iPhone of health care’ or the ‘Uber for homelessness,’ it is easy to gloss over the problem at hand. Rather than putting the spotlight on prescriptive solutions or novel technologies, successful prize competitions begin with a clear and concise definition of the problem to be solved, as well as the piece or pieces of the problem that they aim to address through open innovation. Defining a problem too broadly can make it difficult to obtain actionable results; defining it too narrowly can confine innovation to a prescriptive range of approaches. The ideal problem definition sits somewhere in the middle, where it has the opportunity to stimulate and expand a market.

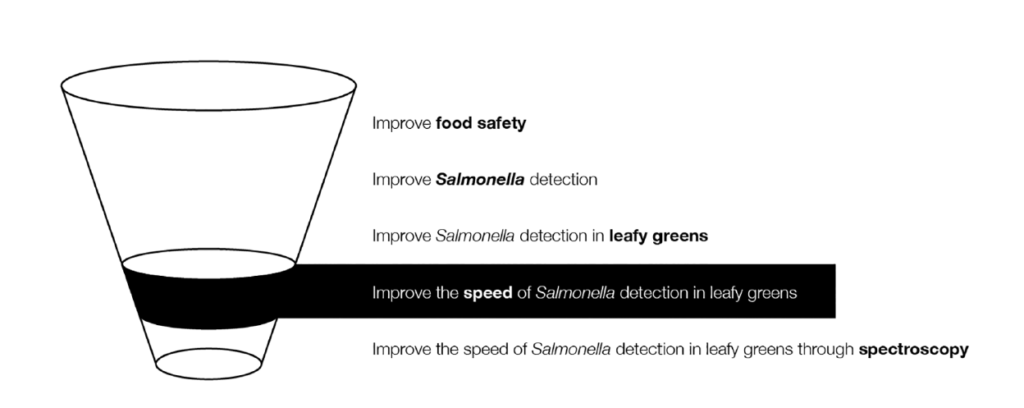

For example, the U.S. Food and Drug Administration (FDA) Office of Foods and Veterinary Medicine (OFVM) sought to improve food safety through an incentive prize competition produced by Luminary Labs in 2014. While the American food supply is among the safest in the world, the Centers for Disease Control and Prevention (CDC) estimates that one in six Americans are sickened by foodborne illness annually. The overall negative economic impact of foodborne illness in the United States, including medical costs, quality-of-life losses, lost productivity, and lost life expectancy, is estimated to be as much as $77 billion per year.

In our early conversations, the FDA noted that it was already connecting with food safety innovators on a regular basis. The purpose of the competition, therefore, was to identify new approaches beyond the known solver base. We set out to design a ‘Goldilocks’ call to action: one that wasn’t so broad that it would elicit intangible solutions, yet not so narrow that it would appeal only to insiders. Striking that balance required us to be clear about which part of the food safety problem to address. Was there a preferred pathogen? On which produce categories would we focus? Which part of the food production system interested us most? Was there a trade-off regarding acceptable thresholds, such as speed or accuracy? And would we consider novel technology (such as spectroscopy or metagenomics) a bonus or a requirement?

Early in the process, we settled on the pathogen Salmonella and the speed at which it can be detected. Salmonella causes over one million illnesses in the United States every year, with about 23,000 hospitalizations and 450 deaths, and is particularly hard to detect. According to David G. White, PhD, FDA OFVM’s chief science officer and research director:

“Detecting low levels of Salmonella in produce can be like finding a needle in a haystack: difficult, expensive and time-consuming. Even a simple tomato might have up to a billion surface bacteria that do not cause harm to humans. Quickly detecting just the few types of bacteria that do cause harm, like Salmonella, is a daunting task.”[3]

To further narrow the problem, we focused on produce — which is responsible for nearly half of foodborne illnesses and almost a quarter of foodborne-related deaths — and specifically, leafy greens, with an emphasis on sample preparation and/or enrichment in the testing process. We now had a problem to solve and a call to action.

At this point, we took a step back and asked the FDA what would constitute a ‘big win.’ They noted that their internal teams were either tracking or researching a number of revolutionary approaches such as metagenomics and quantum detection, as well as new applications of existing technologies, such as spectroscopy. As a thought exercise, we considered a more specific call to action that included this technological focus: “to improve the speed of Salmonella detection in leafy greens through spectroscopy.” Ultimately, however, we determined that narrowing the areas of technology would be too prescriptive and would reduce our ability to tap into a broad and diverse solver base. As a result, the challenge criteria noted that FDA was most interested in solutions that made use of revolutionary approaches or new approaches for existing technologies, but did not make this a formal requirement (Figure 9.1).

Investing in challenge design

In addition to a clearly defined problem statement, the level of investment in challenge design is a good indicator of how successful a prize competition will be. The market is flooded with platforms that aim to democratize open innovation, and better access to tools and crowds is a good thing. But without deliberate challenge design, even the strongest problem statement is not guaranteed to meet its objectives.

Thoughtful challenge design first addresses why the problem has not yet been solved. Some problems are technically difficult, expensive, or even dangerous to solve. In other cases, the solver base might be unaware of the problem, uninterested in it, or unaware that their current work applies to other fields. In rare situations, there are simply not enough solvers with the required expertise.

The answers to this question are then balanced with incentives. People enter prize competitions for a variety of reasons. A common framework, inspired by the age of exploration and popularized by the U.S. Prize Authority, is Good, Glory, Guts, and Gold. Good speaks to the intrinsic motivation, glory to external validation, guts to the challenge itself, and gold to resources (both monetary and non-monetary) offered as an incentive. Any given challenge might have one or more primary motivators, and solvers tend to be rational, weighing the benefits of allocating their time and energy in pursuit of a prize.

The challenge design itself can serve as an additional motivator, or a deterrent, to participation. For example, onerous criteria may result in a smaller pool of submissions. That might be acceptable to the sponsor, but if the sponsor is seeking a large number of solutions from a cross-section of solvers, it would be wise to lower the barriers to entry or reconsider the challenge timeline and incentives to attract more solvers. The intellectual property (IP) stance is also a hot-button issue for solvers. While many prize competitions allow innovators to keep their IP, sponsors frequently include protections against future claims or a license to the solution and its derivatives. Solvers weigh these trade-offs against the prize purse: if the purse is too small, an early-stage team might feel that it has more to gain by not entering.

Numerous challenge design questions surfaced during the research phase for the U.S. Department of Education EdSim Challenge, which called on augmented reality (AR), virtual reality (VR), and gaming developers to produce educational simulations that would strengthen academic, technical, and employability skills. By 2012, the gaming industry had overtaken the movie industry, earning $79 billion globally that year. Yet despite growing consumer adoption, especially among youth, there was limited innovation in the development of simulations for the K-12 and postsecondary education markets. The growing base of experts in AR, VR, and immersive game technologies either did not recognize the market opportunity or found the commercial gaming opportunity far more lucrative.

Simulation development is very costly: even at the prototype stage, it can run to more than $1 million. We quickly discovered that to stimulate interest in developing education simulations, we would need to communicate the market opportunity (today students learn from textbooks, but the future will include simulations), offer a cash prize purse large enough to offset the costs of development, and provide nonmonetary incentives of real value to participants.

While traditional research and intellectual property searches are helpful tools for understanding what has been done, engaging with real solvers is the best way to understand which combination of incentives (Good, Glory, Guts, Gold) will inspire participation. Early in the process, we gained input and buy-in from influential stakeholders, including educators, the game industry, academia, big tech, and hiring organizations, through a formal convening, expert panels, and public feedback. In addition to providing valuable information, these conversations fostered relationships that came to bear later in the program: adding to the government-provided $680,000 prize purse, respected organizations such as IBM, Microsoft, Oculus, and Samsung provided both software and gear, including recently released VR headsets and free cloud services. These resources sent a clear signal to the market that there was an opportunity to transform learning through commercial game-quality simulations.

But what exactly were we asking participants to submit? And what would the parameters be for the winning solution? The design and development of a working simulation has many phases, and while the prize purse was significant, solvers made it clear that the requirements of the first-round submission would need to be achievable enough to merit the effort.

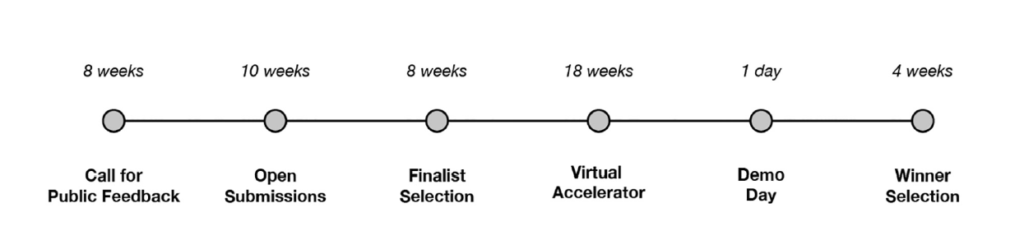

We designed the flow to include two rounds of judging, each requiring a different degree of fidelity (see Figure 9.2). The open submissions round would seek a detailed concept and design, including a description of the concept, simulation experience, and learning objectives; a development plan and technical considerations; early thinking around implementation and scaling; and storyboards or visual mock-ups. During this round, the jury would narrow the pool to five finalists, who would each receive $50,000, hardware and software from the sponsors, and access to a virtual accelerator to support development of a playable prototype to be presented at a demo day. The second and final round of judging would require detailed plans, including a description of the learning outcomes and assessment metrics; interoperability considerations and open source elements; and a playable prototype. Following the demo day, the grand prize winner would take home $430,000.
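As a quick arithmetic check, assuming that the government-provided $680,000 purse covers only the cash awards in this two-round structure (the sponsors’ hardware, software, and cloud services sit outside it), the five finalist awards and the grand prize add up exactly to that total:

$$
\underbrace{5 \times \$50{,}000}_{\text{finalist awards}} + \underbrace{\$430{,}000}_{\text{grand prize}} = \$250{,}000 + \$430{,}000 = \$680{,}000
$$

On that reading, the grand prize winner, having also received a finalist award, would collect $480,000 in cash overall.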

In September 2017, the jury, whose members hailed from organizations including Ford, Microsoft, and Girls Who Code, had the chance to immerse themselves in fully functional simulations during a demo and pitch day. From a hands-on visit to the operating room to an exploration of astronomy concepts, the finalists’ simulations spanned a wide range of educational experiences that teach career and technical skills. The winner was Osso VR, a surgical training platform that enables users to practice cutting-edge techniques through realistic, hands-on simulations, bridging the gap between career exploration and career preparation. By late 2018, Osso VR’s team had raised $2.4 million in capital and launched a partnership with eight American medical residency programs, including those at Columbia, UCLA, Harvard, and Vanderbilt.

Challenge design is both an art and a science that requires balancing the interests of the sponsor and the solver through motivation and incentives. When these are in harmony, they inform a suite of highly interrelated elements — including the call to action, criteria, timeline, terms and conditions, intellectual property stance, prize amounts and structure, submission form, jury selection, and judging rubrics — to support the overarching goal and desired outcome.

Closing the gap

Most startups fail, and many concepts never make it past paper. This is precisely why venture capitalists place bets on teams with the ability to ‘close the gap’ between a good idea and a commercializable product or service. The same can be said of open innovation: a frequent complaint is that solvers’ concepts often die on the vine. Challenges that solicit ideas are nice, but making those ideas real is always preferable. This is particularly true for impact challenges. It’s one thing to fail to meet a commercial aim; failing to meet a humanitarian or societal goal can carry consequences of an entirely different order.

Back in 2011, when we launched our first challenge, most prize competitions were simply offering money for ideas. Our client, however, was in search of solutions that could be commercialized in the near term. We developed a multistage challenge methodology that shepherds the strongest solutions through an iterative process, ultimately closing the gap between the concept and real-world viability.

To do this, we borrowed best practices from two trends then on the rise in business and adapted them to the open innovation challenge format. We looked to traditional tech accelerators that offered resources in the form of seed money, education, and mentorship, and modified their typical structure so that founders wouldn’t be required to move across the country or give up equity. We also drew from design thinking methodologies to firmly assert that the innovation needed to be human-centered, and we added educational modules that helped turn concepts into tangible, market-viable products and services. Interestingly, these two circles (tech accelerators and design thinking methodologies) did not yet intersect. We combined empathy building, subject matter knowledge, rapid prototyping, and business modeling to support iteration. And it worked: the winning team pivoted its solution mid-challenge after engaging with end users and participating in rapid prototyping exercises. That team has since raised more than $25 million in capital, recruited over 30 enterprise clients, and launched its service nationwide.

When designing the Alexa Diabetes Challenge, a $250,000 multistage prize competition sponsored by Merck & Co., Inc., Kenilworth, New Jersey, U.S.A., in collaboration with Amazon Web Services (AWS), we realized that nearly every solver would have a significant technical gap, as voice-enabled technology was in its infancy and health applications were few and far between. At the time, U.S. smart speaker sales had doubled and nearly half of all Americans had used a voice assistant. Yet the majority of early applications were for entertainment purposes. The challenge hypothesized that voice assistant uses would evolve from managing music playlists to managing life, including supporting people newly diagnosed with Type 2 diabetes.

The challenge received nearly 100 submissions from a broad cross-section of solvers, including academic research teams, individuals, startups, and even public companies. We needed to ensure that the finalist teams had the skills, especially those outside their core expertise, to produce viable solutions. To do so, each of the five finalist teams received $25,000, promotional credits from Amazon Web Services, and access to the virtual accelerator, which included an in-person boot camp at Amazon’s Seattle headquarters. The boot camp featured a deep focus on the patient experience and behavioral economics, with sessions led by experts in diabetes education and health care innovation as well as Type 2 diabetes patients themselves. The finalists also worked directly with the AWS team to explore how they could harness Amazon services for transformative health care solutions. Last but not least, the teams participated in a ‘round robin’ session, rotating through working meetings with ten experts in diabetes management, health tech, data privacy, AI, voice technology, and voice user experience.

Anne Weiler, CEO of Wellpepper, the Alexa Diabetes Challenge winner, noted that when deciding whether to enter the challenge, she regarded the prize purse and expected publicity as table stakes. It was the learning opportunities, as well as the dedicated space and time to explore the problem, that ultimately enticed her to enter. Our 2018 survey of prize-winning teams (semifinalists, finalists, and winners of 14 impact-focused challenges we produced over the past eight years) reiterated this sentiment. While only 10% of teams surveyed said learning opportunities, in the form of a virtual accelerator that could include a boot camp, piloting, and/or mentorship, were their primary motivation for entering, nearly half of teams surveyed (47%) named learning opportunities as an important benefit of participating once the challenge was over.

Measuring outcomes

As competitions, open innovation challenges inherently seek a winner. In this sense, one could measure the success of a prize competition simply by asking whether a winner was selected. Some challenges clearly state that an award will be made only if a team meets the criteria. In 2018, the Google Lunar X Prize competition, which offered $30 million in prizes to privately funded teams racing to send a spacecraft to the moon before March 31, 2018, ended without a winner.

But this does not necessarily mean that the challenge was a flop. While no team made it to the moon in time, both finalists and non-finalists continue to forge ahead, suggesting that open innovation challenges, and especially those focused on impact, play both a short and a long game. In the short term, the objective was not met within the required time frame. In the longer run, however, the competition stimulated the market to do something it otherwise might not have, and a commercial team is expected to eventually reach the moon as a result.

Google, however, may be an outlier. Most organizations value, and oftentimes require, quick wins to make the case for open innovation. Therefore, a more common approach to evaluating the success of a challenge is to assess both the quality and quantity of submissions in relation to the sponsor’s objectives. Nearly every challenge will result in submissions that don’t meet the criteria, but if the majority fall outside the range, or the winner doesn’t quite hit the mark, that is an indicator that something was amiss in the challenge design.

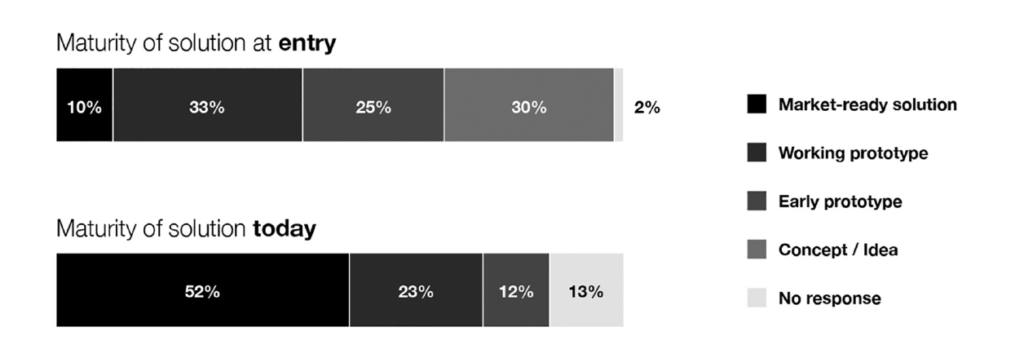

For sponsors that seek to stimulate a number of solutions, success hinges on what happens after the challenge. Did prize winners continue to develop their solutions? Or did they immediately disband? In our 2018 survey of prize winners, we found that almost all (92%) of teams surveyed continued developing their solutions after the challenge; at publication, 10 of 13 were market ready and two were working prototypes (Figure 9.3).

Another indicator of progress is the filing of patents. Eight teams from our survey reported filing at least one patent related to their solution since participating in a challenge. Two of those teams — Diabetty and Sugarpod by Wellpepper — filed patents for their voice technology solutions after participating in the 2017 Alexa Diabetes Challenge. And two others, finalists in the FDA Food Safety Challenge, filed patents after participating in the boot camp, suggesting that learning opportunities can create additional value.

Money talks, and the level of interest from the investment community is often a proxy for a challenge’s ability to stimulate or even create a market. While raising money does not guarantee success (venture-backed companies regularly flop), capital is critical to ongoing development, especially for solutions addressing the thorniest problems. Our survey found that two-thirds of teams raised funding after the challenge, in amounts ranging from $5,000 to $25 million, from sources including grants, venture capital, seed or angel investments, crowdfunding, and prizes. Osso VR, the EdSim Challenge winner, raised $2 million in venture capital and has deployed its surgical training solution through partnerships with eight top U.S. medical residency programs. Smart Sparrow, a finalist of the same challenge, received a $7.5 million investment from global education nonprofit ACT. Based on our survey, we estimate that prize recipients from our challenges have cumulatively raised $100 million in capital.

For some challenge sponsors, the pinnacle is the ability to deploy the winning solution. For example, the Purdue University team that was named grand prize winner of the 2014 FDA Food Safety Challenge has developed more advanced prototypes in partnership with other investigators and the challenge runner-up; collectively, they continue to work with scientists at FDA as they create an instrument for field testing.

Conclusion

In the twentieth century, organizations prioritized internal excellence, protected intellectual property, and stoked competitive rivalries. In the first decades of the twenty-first century, we’re witnessing the emergence of a new way to win: open innovation is all about partnership and collaboration in pursuit of new solutions to complex problems. Digitalization and globalization make it possible to tap the collective intellect of the Earth’s population, not just the experts an organization has hired. And a global body of solvers willingly participates, engaging in the co-creation of new products, solving algorithmic challenges, making use of open data sets, and competing in prize competitions.

We now have an entirely new framework for solving problems, one particularly well-suited to solving the problems that matter. The newness of open innovation brings certain challenges; not unlike the early days of ‘digital,’ most organizations are experiencing the growing pains of developing the competency. Though the field is well-studied, open innovation is still practiced differently by different sectors, industries, organizations, and even from one individual to the next. There are no established organizational, investment, or reporting models for open innovation, no common vernacular, and its champions are still busy educating and socializing the concept within their organizations. Tapping into the truly transformative power of open innovation will require a seismic shift in the way people think and the way organizations work.

In the interim, we are in a moment of extensive experimentation as private sector, nonprofit, and government organizations create proof points of how opening up can create community, stimulate markets, and surface viable solutions to the benefit of humanity.
